
Editor’s note: AWS is probably one of the first names that comes to mind when you hear the word “cloud”. Welcome to this deep dive into the world of Amazon Web Services (AWS). This blog series aims to give you an all-in-one overview of AWS, from its core offerings to its latest innovations and real-world applications. We'll break down the complex into insights that can help you achieve your technological ambitions.

Amazon Web Services (AWS) has emerged as a powerhouse, setting new benchmarks in scalability, innovation, and efficiency. Launched in 2006, AWS has since moved to the forefront of the cloud industry, with a leading market share and a client base that spans the globe.

A Brief Journey Through AWS's History

AWS's journey began with a vision to democratize access to cutting-edge computing power. From its humble beginnings, AWS has grown into an expansive ecosystem, encompassing a diverse array of offerings designed to meet the demands of every industry and application.

The Industry Giant

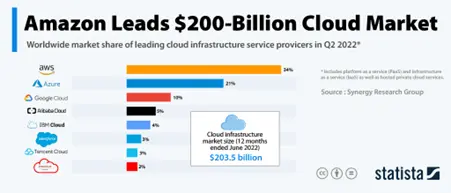

Today, AWS commands a lion's share of the cloud market, with an estimated 34% of the Infrastructure as a Service (IaaS) market worldwide.

What sets AWS apart is not only its size but its relentless commitment to innovation. From popularizing serverless computing with AWS Lambda to reshaping data storage with Amazon S3, its services have consistently led the charge in redefining cloud computing.

In this technical deep dive, we explore the core services, security measures, scalability solutions, and performance optimizations that have made AWS the go-to choice for businesses of all sizes.

Compute Services

Amazon EC2 provides a wide selection of instance types optimized to fit different use cases. From burstable instances for cost-effective, low-demand applications to memory-optimized instances for high-performance databases, EC2 offers considerable flexibility. Lambda, AWS's serverless compute service, runs code in response to events. This model removes the need to provision and manage servers, so teams can focus on their code while AWS handles execution and scaling.
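To make the event-driven model concrete, here is a minimal sketch of a Python Lambda handler that reacts to an S3 object-created notification. The bucket name, object key, and returned summary are illustrative assumptions. The nested `Records` layout follows the S3 event notification format, and the sample event lets you try the function locally without an AWS account.

```python
from urllib.parse import unquote_plus


def lambda_handler(event, context):
    """Return the bucket/key pairs named in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record["s3"]
        bucket = s3_info["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode them.
        key = unquote_plus(s3_info["object"]["key"])
        objects.append({"bucket": bucket, "key": key})
    return {"processed": len(objects), "objects": objects}


# Hypothetical event for local experimentation only.
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "example-bucket"},
                "object": {"key": "incoming/daily+report.csv"},
            }
        }
    ]
}
result = lambda_handler(sample_event, None)
print(result)
```

In a real deployment the handler would typically read the object and pass it on for processing; this sketch only extracts the identifiers.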

Storage Services

Amazon S3 is designed for 99.999999999% (11 nines) durability and offers a variety of storage classes to suit diverse use cases. S3 Glacier, for instance, caters to archival needs, while S3 Intelligent-Tiering automatically optimizes storage costs. Amazon EBS, on the other hand, provides low-latency, high-IOPS block-level storage, ideal for applications that require consistent and predictable performance.
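The 11 nines figure is easier to grasp with a little arithmetic. The sketch below assumes a hypothetical store of 10 million objects and treats the durability figure as an annual per-object survival rate. Under those assumptions the expected loss works out to about one object every 10,000 years.

```python
from fractions import Fraction

# 99.999999999% durability, written exactly to avoid floating-point rounding.
durability = Fraction(99_999_999_999, 100_000_000_000)
annual_loss_rate = 1 - durability  # 1 in 100 billion objects per year

stored_objects = 10_000_000  # hypothetical object count
expected_losses_per_year = stored_objects * annual_loss_rate
years_per_lost_object = 1 / expected_losses_per_year

print(f"Expected losses per year: {float(expected_losses_per_year)}")
print(f"Roughly one object lost every {int(years_per_lost_object):,} years")
```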

Database Services

Amazon RDS supports a multitude of database engines, including MySQL, PostgreSQL, and Oracle, providing automated backups, patch management, and high availability. DynamoDB, a NoSQL database service, provides single-digit millisecond latency at any scale. Its seamless scalability and consistent performance make it a go-to choice for web and mobile apps that demand high throughput.

Machine Learning Services

Amazon SageMaker simplifies the process of building, training, and deploying machine learning models. Its comprehensive toolset covers everything from data preparation and model training to deployment and monitoring. By providing a fully managed environment, SageMaker allows data scientists and developers to focus on model innovation rather than infrastructure management.

Data Analytics in AWS

Amazon Redshift, a fully managed data warehousing solution, uses columnar storage and parallel query execution to deliver high performance on complex analytical queries across large datasets. Amazon Athena, on the other hand, lets you run SQL directly against data stored in S3, which can eliminate time-consuming ETL processes. This makes AWS data analytics a real help for analysts, who can use Athena to extract insights quickly from raw data in formats such as CSV, JSON, and Parquet.

While the technical capabilities are impressive, cost optimisation in AWS is equally crucial. The AWS cloud computing stack provides a range of tools and best practices to help organizations optimize their cloud spending.

Pricing Models

AWS offers various pricing models to suit different usage patterns. On-demand instances provide flexibility but may not be the most cost-effective option for sustained workloads. Reserved Instances offer significant savings for predictable workloads, while Spot Instances provide access to spare capacity at lower prices.
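The trade-off between on-demand and reserved pricing comes down to utilization. The sketch below uses hypothetical hourly rates (not real AWS prices) and assumes a reservation is billed for every hour of the month whether or not the instance runs. Spot pricing is left out because it fluctuates with spare capacity.

```python
HOURS_PER_MONTH = 730  # average hours in a month

# Hypothetical hourly rates, for illustration only; not real AWS prices.
ON_DEMAND_RATE = 0.10           # billed only for hours actually used
RESERVED_EFFECTIVE_RATE = 0.06  # billed for every hour of the term


def on_demand_cost(hours_used: float) -> float:
    """Monthly cost when paying only for the hours the instance runs."""
    return hours_used * ON_DEMAND_RATE


def reserved_cost() -> float:
    """Monthly cost of a reservation, paid regardless of actual usage."""
    return HOURS_PER_MONTH * RESERVED_EFFECTIVE_RATE


def break_even_utilization() -> float:
    """Fraction of the month above which the reservation is cheaper."""
    return RESERVED_EFFECTIVE_RATE / ON_DEMAND_RATE


for utilization in (0.25, 0.50, 1.00):
    hours = HOURS_PER_MONTH * utilization
    cheaper = "reserved" if reserved_cost() < on_demand_cost(hours) else "on-demand"
    print(f"{utilization:.0%} utilization -> {cheaper} is cheaper")
```

With these assumed rates the reservation only pays off when the instance runs more than about 60% of the time, which is why reservations suit steady, predictable workloads.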

Right-Sizing Instances

Selecting the right instance type and size is pivotal for cost optimization in AWS. Integrated tools like AWS Trusted Advisor and Cost Explorer analyze usage patterns and recommend appropriate instance types to match workload requirements.

Utilizing AWS Trusted Advisor

AWS Trusted Advisor is a powerful tool that offers personalized cost-saving recommendations based on your AWS usage patterns. It provides insights into areas such as underutilized resources, idle instances, and opportunities for reserved instance optimization.

Monitoring and Alerts

Setting up CloudWatch alarms and budgets helps organizations keep a close eye on their spending. By establishing thresholds and receiving alerts when costs approach predefined limits, businesses can take proactive steps to control expenses.
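The threshold logic behind such alerts can be sketched in a few lines. The functions below are hypothetical helpers, not part of any AWS SDK, and the spend figures are made up. They show how a budget check and a naive linear forecast might flag overspending before the month ends.

```python
def crossed_thresholds(spend, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the budget fractions that current spend has reached."""
    return [t for t in thresholds if spend >= budget * t]


def forecast_month_end(spend, day_of_month, days_in_month):
    """Naive linear projection of month-end spend from spend so far."""
    return spend / day_of_month * days_in_month


# Hypothetical figures: $850 spent by day 20 of a 30-day month, $1,000 budget.
alerts = crossed_thresholds(spend=850.0, budget=1000.0)
projected = forecast_month_end(spend=850.0, day_of_month=20, days_in_month=30)

print(f"Thresholds reached: {alerts}")
print(f"Projected month-end spend: {projected:.2f}")
```

Here the 50% and 80% thresholds have been reached, and the projection exceeds the budget even though actual spend has not yet done so. That is the kind of early warning a forecast-based alert is meant to give.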

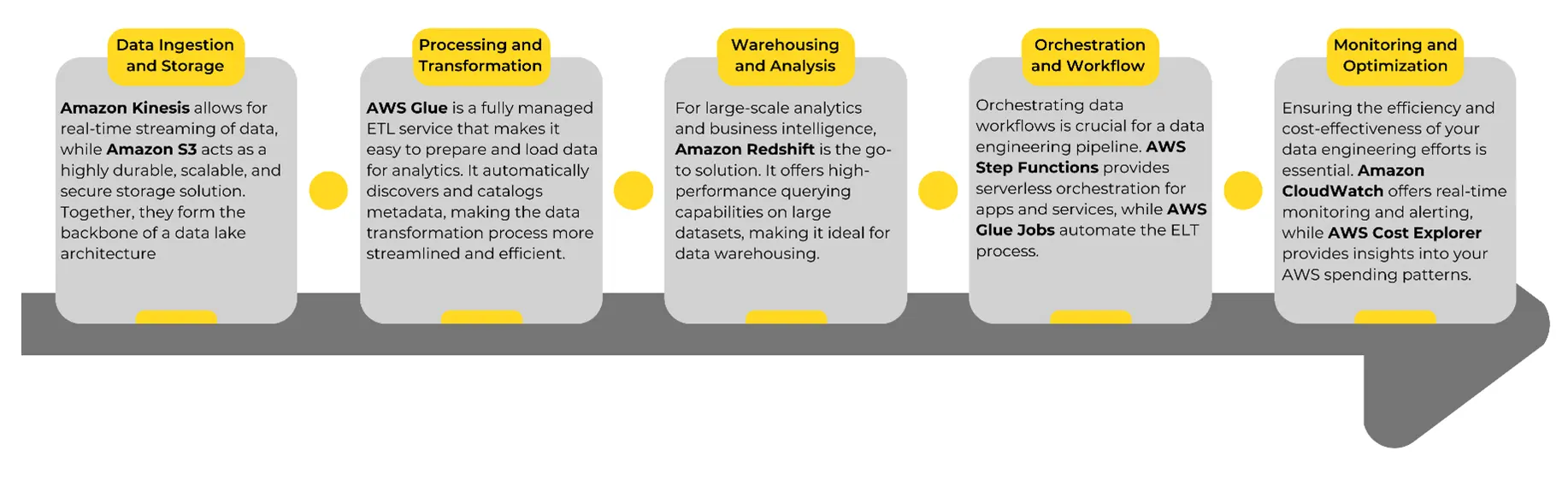

With AWS's robust suite of services, data engineering becomes an agile and powerful process. Let's delve into how you can embark on your data engineering journey with AWS.

Getting Started with Data Engineering on AWS

Now that we've covered the core components, let's take a closer look at how you can kickstart your journey into data engineering on AWS:

Define Your Data Sources:

Identify the sources of your data and plan how you'll ingest it into your AWS environment. Whether it's real-time streaming or batch processing, AWS offers a range of services to suit your needs.

Architect Your Data Lake:

Leverage Amazon S3 as the central repository for your data lake. Ensure proper bucket structure and naming conventions for efficient data organization.
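One common convention, shown here as an assumption and not a requirement, is to separate data into zones and use Hive-style `key=value` date partitions in object keys. Query engines that understand this layout can then skip partitions a query does not need. The zone, dataset, and file names below are hypothetical.

```python
from datetime import date


def partitioned_key(zone: str, dataset: str, day: date, filename: str) -> str:
    """Build an S3 object key using Hive-style date partitions."""
    return (
        f"{zone}/{dataset}/"
        f"year={day.year:04d}/month={day.month:02d}/day={day.day:02d}/"
        f"{filename}"
    )


key = partitioned_key("raw", "orders", date(2024, 1, 15), "part-0000.parquet")
print(key)  # raw/orders/year=2024/month=01/day=15/part-0000.parquet
```

Zero-padding the month and day keeps keys in chronological order when they are sorted lexicographically.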

Design Your ETL Processes:

Utilize AWS Glue to define and automate your ETL processes. This will streamline the transformation and preparation of your data for analysis.

Select Your Data Warehousing Strategy:

Depending on your analytics requirements, choose between Amazon Redshift and other suitable data warehousing solutions offered by AWS.

Orchestrate Your Workflows:

Use AWS Step Functions to create and manage your data engineering workflows. This will ensure that tasks are executed in the right sequence and dependencies are met.
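Step Functions workflows are described in Amazon States Language, a JSON format in which each state names its successor. The sketch below builds a hypothetical three-step pipeline as a Python dictionary and runs a simplified consistency check over it. The state names and placeholder ARNs are assumptions, and the check covers only linear `Task` chains, not the full specification.

```python
import json

# Hypothetical pipeline; the ARNs are placeholders, not real resources.
ARN_PREFIX = "arn:aws:lambda:REGION:ACCOUNT_ID:function:"

state_machine = {
    "Comment": "Illustrative ingest, transform, load pipeline",
    "StartAt": "Ingest",
    "States": {
        "Ingest": {"Type": "Task", "Resource": ARN_PREFIX + "ingest", "Next": "Transform"},
        "Transform": {"Type": "Task", "Resource": ARN_PREFIX + "transform", "Next": "Load"},
        "Load": {"Type": "Task", "Resource": ARN_PREFIX + "load", "End": True},
    },
}


def validate(definition: dict) -> list:
    """Return structural problems; an empty list means the chain is consistent."""
    states = definition["States"]
    problems = []
    if definition["StartAt"] not in states:
        problems.append("StartAt names an unknown state")
    for name, state in states.items():
        target = state.get("Next")
        if target is not None and target not in states:
            problems.append(f"{name} points to unknown state {target}")
        if target is None and not state.get("End"):
            problems.append(f"{name} has neither Next nor End")
    return problems


problems = validate(state_machine)
definition_json = json.dumps(state_machine, indent=2)
print(problems or "definition looks consistent")
```

Because each step declares its `Next` state, the ordering and dependencies live in the definition itself instead of being scattered across scripts.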

Implement Monitoring and Cost Controls:

Set up Amazon CloudWatch alarms to monitor the health and performance of your data engineering processes. Use AWS Cost Explorer to gain insights into your spending patterns and optimize resource allocation.

The above approach will help you establish a solid foundation for your data engineering ambitions on AWS.

Data Visualization:

Amazon QuickSight connects to diverse AWS data sources such as Amazon Redshift, RDS, and S3, offering interactive dashboards and detailed analysis capabilities. Its SPICE in-memory engine enables high-speed querying and visualization of large datasets. Using QuickSight’s APIs and embedding options, teams can integrate visualizations into applications and portals, giving users timely insights for informed decision-making.

ML Modeling:

Amazon SageMaker integrates a plethora of machine learning tools, including built-in algorithms and frameworks like TensorFlow and MXNet. SageMaker facilitates hyperparameter tuning and model optimization through automatic scaling and distributed training across multiple instances, ensuring efficient model building and deployment. With its managed notebooks, developers can experiment and collaborate on model development within a secure and scalable environment.

Enterprise Governance:

AWS Organizations enables the hierarchical management of AWS accounts and policies, allowing fine-grained control over resource access and compliance. The service supports service control policies (SCPs) to enforce security and compliance standards across multiple accounts. Coupled with AWS Control Tower, which automates the setup of a well-architected multi-account environment, it provides guardrails and standardized configurations for consistent governance and compliance.
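As an illustration of what an SCP can look like, the sketch below assembles a policy document that denies requests outside a list of approved regions. The region list and statement ID are hypothetical, and the policy is deliberately simplified. Production versions usually exempt global services such as IAM, which this sketch does not do.

```python
import json

# Hypothetical list of approved regions; adjust to your own policy.
APPROVED_REGIONS = ["eu-west-1", "us-east-1"]

region_guardrail = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyOutsideApprovedRegions",
            "Effect": "Deny",
            "Action": "*",
            "Resource": "*",
            # Deny any request whose target region is not in the approved list.
            "Condition": {
                "StringNotEquals": {"aws:RequestedRegion": APPROVED_REGIONS}
            },
        }
    ],
}

policy_json = json.dumps(region_guardrail, indent=2)
print(policy_json)
```

Attached to an organizational unit, a policy of this shape acts as a guardrail: it sets an upper bound on what accounts beneath it can do, whatever permissions are granted inside those accounts.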

Scalability and Modernization:

AWS Glue automates ETL tasks, orchestrating data pipelines with its serverless architecture and schema evolution capabilities. Glue's crawlers discover and catalog data sources automatically, inferring schemas to support metadata management and the transformation of raw data into structured formats. Complementing this, AWS Lambda, with its event-driven architecture, scales automatically to process data transformations, reducing operational overhead and helping to control costs.

AWS's technical depth, comprehensive service offerings, and relentless pursuit of innovation have placed it in a league of its own. Its commitment to making the platform more business-friendly, versatile, and scalable, combined with a global infrastructure and an extensive partner network, makes AWS the go-to choice for businesses seeking to leverage the full potential of the cloud.

About Author

Sports and Tech Enthusiast

In a world of opinions and cold numbers, data tells a compelling story.