
Organisations today generate staggering volumes of data, from customer engagements and IoT sensor readings to social media posts and transaction records. An estimated 90% of enterprise data is unstructured, and traditional relational databases are simply not built to process that variety at scale.

Enterprises face challenges that most traditional storage systems struggle with, such as:

These issues have created a perfect storm in which organizations hold huge data assets but lack the infrastructure to turn them into a competitive advantage.

There is more to Azure Data Lake (specifically ADLS Gen2) than just storage!

Built on top of Azure Blob Storage, Azure Data Lake Storage Gen2 (ADLS Gen2) is a second-generation big data storage solution. It combines the advantages of object storage with file system semantics, provided by a hierarchical namespace, in a single storage model.
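Why do file system semantics matter? ADLS Gen2's hierarchical namespace makes directories real objects, so renaming or moving a directory is a single metadata operation rather than a copy of every file beneath it. The toy model below is a minimal sketch of that difference; the class names and methods are hypothetical illustrations, not the Azure Storage SDK.

```python
# Toy model (not the Azure SDK): contrasts a directory rename in a flat
# object store with one in a store that has a hierarchical namespace.

class FlatObjectStore:
    """Flat namespace: a 'directory' is only a shared name prefix."""

    def __init__(self):
        self.objects = {}  # full path -> bytes

    def put(self, path, data):
        self.objects[path] = data

    def rename_dir(self, old, new):
        """Move every object under the prefix one by one; return the operation count."""
        prefix, target = old.rstrip("/") + "/", new.rstrip("/") + "/"
        moved = [p for p in self.objects if p.startswith(prefix)]
        for path in moved:
            self.objects[target + path[len(prefix):]] = self.objects.pop(path)
        return len(moved)


class HierarchicalStore:
    """Hierarchical namespace: directories are real nodes in a tree."""

    def __init__(self):
        self.root = {}  # name -> dict (directory) or bytes (file)

    def _node(self, parts, create=False):
        node = self.root
        for part in parts:
            node = node.setdefault(part, {}) if create else node[part]
        return node

    def put(self, path, data):
        *dirs, name = path.strip("/").split("/")
        self._node(dirs, create=True)[name] = data

    def rename_dir(self, old, new):
        """Detach one directory node and reattach it elsewhere: a single operation."""
        *old_parent, old_name = old.strip("/").split("/")
        *new_parent, new_name = new.strip("/").split("/")
        subtree = self._node(old_parent).pop(old_name)
        self._node(new_parent, create=True)[new_name] = subtree
        return 1

    def exists(self, path):
        try:
            self._node(path.strip("/").split("/"))
            return True
        except (KeyError, TypeError):
            return False


flat, hns = FlatObjectStore(), HierarchicalStore()
for i in range(1000):
    flat.put(f"raw/2024/part-{i}.parquet", b"...")
    hns.put(f"raw/2024/part-{i}.parquet", b"...")
print(flat.rename_dir("raw/2024", "archive/2024"))  # 1000
print(hns.rename_dir("raw/2024", "archive/2024"))   # 1
```

The operation counts are the point: with a flat namespace, the cost of a rename grows with the number of files, which matters when analytics jobs routinely commit output by renaming staging directories.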

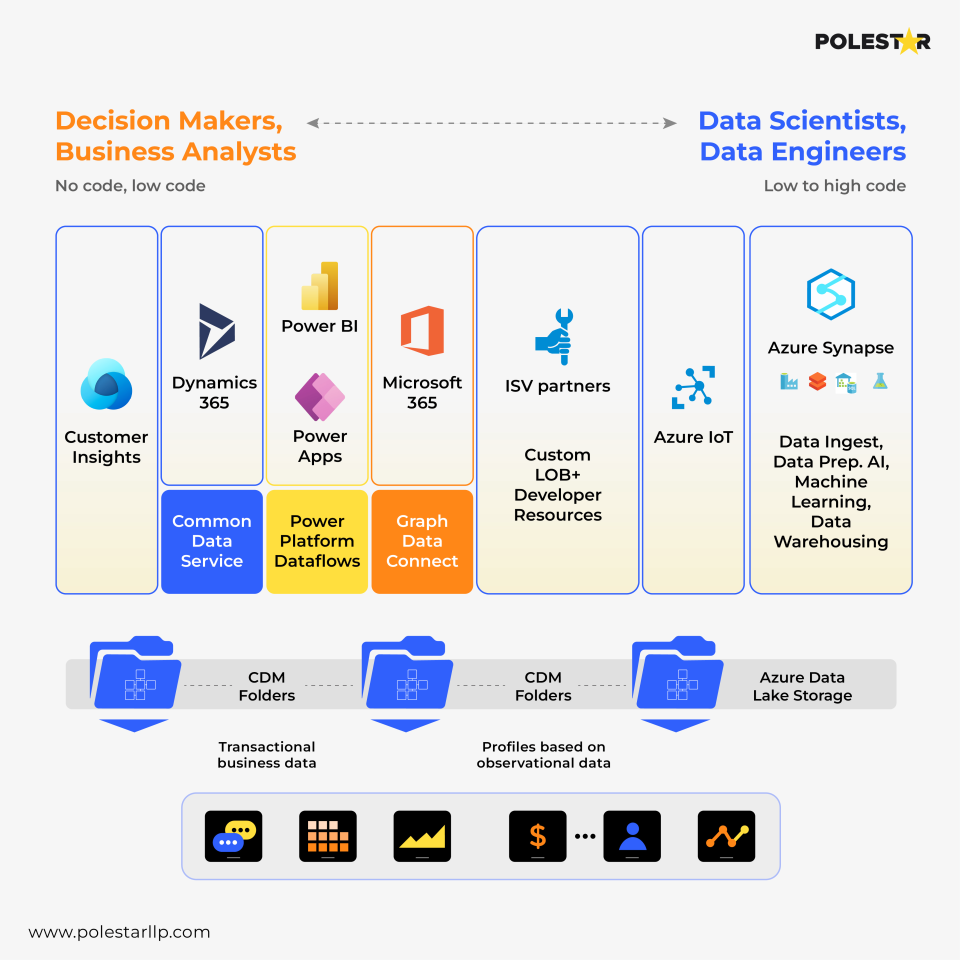

Thanks to ADLS Gen2's tight integration with other Azure services such as Azure Synapse Analytics, Azure Databricks, and Azure Data Factory, businesses can store, process, and analyze petabytes of structured, semi-structured, and unstructured data while meeting the performance needs of analytics and artificial intelligence workloads.

This marks a major shift from conventional storage to an intelligent data ecosystem. The Azure Data Lake architecture lays the scalable foundation on which AI/ML models can flourish, offering enterprise-grade capabilities tailored to AI and analytics workloads. To get the most out of Azure Data Lake, it is essential to understand its fundamental elements:

Foundational Intelligence Features:

Three layers in the Azure Data Lake architecture work together to turn unprocessed data into actionable insights:

These components of Azure Data Lake enable organizations to implement medallion architectures where bronze layers store raw data, silver layers contain cleaned features, and gold layers house production-ready datasets optimized for specific AI models, ensuring both data quality and rapid model deployment!
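A minimal Python sketch of the medallion pattern follows. It assumes one container holding `bronze/`, `silver/`, and `gold/` top-level folders addressed with the `abfss://<container>@<account>.dfs.core.windows.net/<path>` URI form; the account, container, dataset, and column names are hypothetical, and the cleaning and aggregation rules are placeholders for whatever a real pipeline would apply.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

LAYERS = ("bronze", "silver", "gold")


@dataclass(frozen=True)
class LakeLayout:
    account: str    # hypothetical storage account name
    container: str  # hypothetical container (file system) name

    def uri(self, layer: str, dataset: str, as_of: Optional[date] = None) -> str:
        """Build an abfss:// URI for a dataset in one medallion layer."""
        if layer not in LAYERS:
            raise ValueError(f"unknown layer {layer!r}; expected one of {LAYERS}")
        path = f"{layer}/{dataset}"
        if as_of is not None:
            path += f"/ingest_date={as_of.isoformat()}"
        return f"abfss://{self.container}@{self.account}.dfs.core.windows.net/{path}"


def to_silver(bronze_rows):
    """Bronze -> silver: keep only rows that parse, with normalised types."""
    silver = []
    for row in bronze_rows:
        try:
            silver.append({"customer_id": str(row["customer_id"]).strip(),
                           "amount": float(row["amount"])})
        except (KeyError, TypeError, ValueError):
            continue  # a production pipeline would quarantine these rows instead
    return silver


def to_gold(silver_rows):
    """Silver -> gold: aggregate into one model-ready feature per customer."""
    totals = {}
    for row in silver_rows:
        totals[row["customer_id"]] = totals.get(row["customer_id"], 0.0) + row["amount"]
    return totals


layout = LakeLayout(account="examplelake", container="analytics")
print(layout.uri("bronze", "orders", date(2024, 1, 5)))
# abfss://analytics@examplelake.dfs.core.windows.net/bronze/orders/ingest_date=2024-01-05
rows = [{"customer_id": " c1 ", "amount": "10.5"}, {"customer_id": "c1", "amount": "4.5"},
        {"customer_id": "c2"}, {"customer_id": "c3", "amount": "n/a"}]
print(to_gold(to_silver(rows)))  # {'c1': 15.0}
```

Keeping bronze untouched means any silver or gold table can be rebuilt from raw data if a cleaning rule changes.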

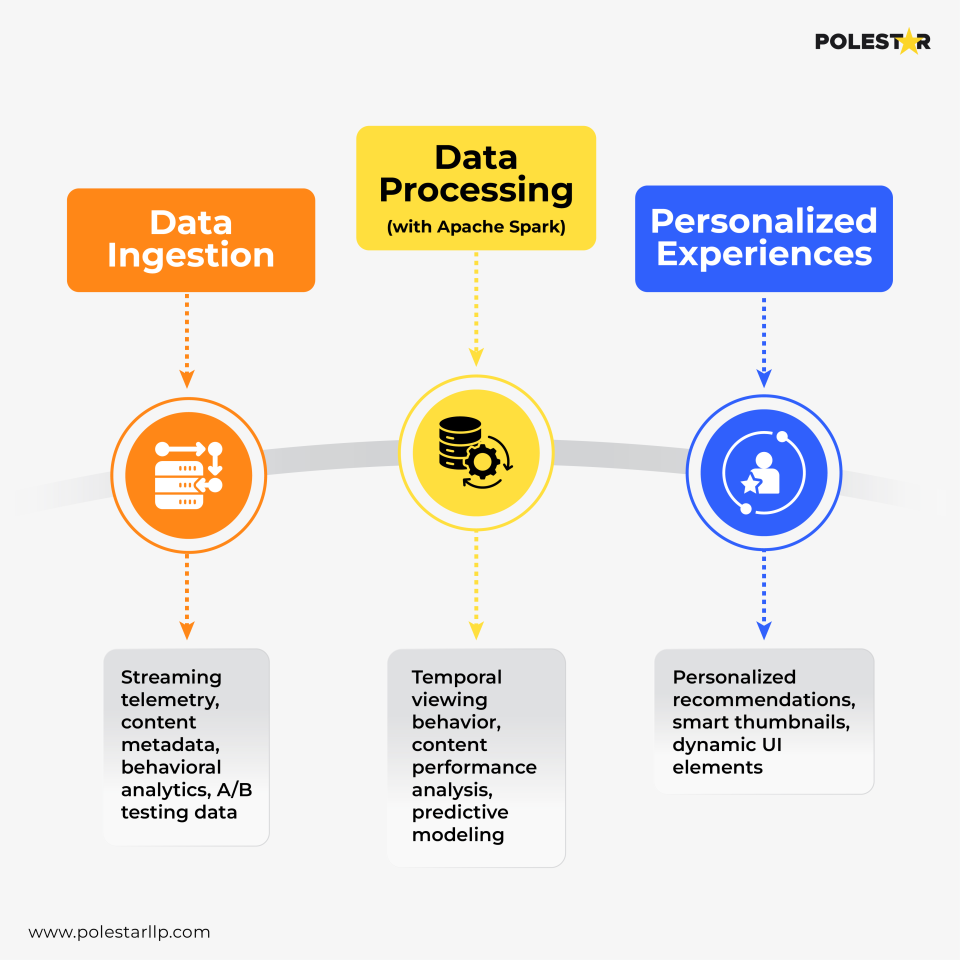

Prominent over-the-top (OTT) streaming platforms are prime examples of how Azure Data Lake solutions and advanced analytics can transform entertainment experiences. These platforms analyse billions of user interactions to deliver hyper-personalized content recommendations that increase engagement and retention.

Azure Data Lake enables streaming services to combine structured transaction data with unstructured behavioral data and build recommendation engines that understand not just what users watched, but how they consumed content and what they are likely to do next. This level of intelligence turns passive viewing into active engagement and can significantly improve customer lifetime value.
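To make "combining structured and behavioral data" concrete, here is a minimal Python sketch that joins structured subscription records with semi-structured JSON-lines playback events to derive a per-user "how did they watch" feature (average completion ratio). Both schemas, the field names, and the choice of feature are hypothetical; a real recommender would compute many such features at far larger scale, typically with Spark on Azure Databricks or Synapse.

```python
import json

# Structured data: subscription records keyed by user (hypothetical schema).
subscriptions = {"u1": {"plan": "premium"}, "u2": {"plan": "basic"}}

# Semi-structured behavioral data: one JSON playback event per line (hypothetical schema).
events_jsonl = """
{"user": "u1", "title": "t1", "event": "stop", "position_s": 3000, "duration_s": 3000}
{"user": "u1", "title": "t2", "event": "pause", "position_s": 300, "duration_s": 2400}
{"user": "u1", "title": "t2", "event": "stop", "position_s": 600, "duration_s": 2400}
{"user": "u2", "title": "t3", "event": "stop", "position_s": 1200, "duration_s": 1500}
"""


def completion_features(events_text, subs):
    """Per user: plan (structured) plus session count and average completion (behavioral)."""
    ratios = {}
    for line in events_text.splitlines():
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        if event.get("event") != "stop":
            continue  # only a 'stop' event tells us how far the viewer got
        ratio = min(event["position_s"] / event["duration_s"], 1.0)
        ratios.setdefault(event["user"], []).append(ratio)
    return {
        user: {
            "plan": subs.get(user, {}).get("plan", "unknown"),
            "sessions": len(values),
            "avg_completion": sum(values) / len(values),
        }
        for user, values in ratios.items()
    }


print(completion_features(events_jsonl, subscriptions))
```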

Modern retailers are revolutionizing customer experiences through AI-powered Azure Data Lake analytics that unify multiple data sources for intelligent automation. These comprehensive Azure Data Lake use cases demonstrate the platform's versatility:

| AI Application | Data Sources | ADLS Gen2 Role | Business Outcome |
|---|---|---|---|
| Personalized Recommendations | Clickstream, purchase history, browsing behaviour | Real-time feature store for ML models | Dynamic product recommendations |
| Inventory Optimization | ERP, RFID sensors, weather data, social sentiment | Predictive analytics data pipeline | Automated reordering reducing stockouts |
| Dynamic Pricing | Competitor pricing, demand patterns, seasonal trends | Real-time price optimization engine | Revenue optimization with margin improvement |
| Customer Journey Analytics | Mobile app, website, store interactions | Unified customer data platform | Omnichannel experiences increasing engagement |

Financial institutions use Azure Data Lake services to power AI-driven fraud detection systems that analyse transaction patterns in real time, reducing false positives while flagging fraudulent activity within milliseconds. Credit risk models process large datasets, including transaction histories, social data, and economic indicators, to make instant lending decisions, transforming the customer experience while maintaining risk discipline.
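The fraud-detection idea can be illustrated with a deliberately simple stand-in. Production systems typically score transactions with trained ML models over many features; the sketch below instead applies two hand-written rules: an amount that is a statistical outlier (z-score) against the customer's history, and too many transactions within 60 seconds. The thresholds, field names, and rules are all assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def score_transaction(txn, history, z_threshold=3.0, max_per_minute=5, min_history=5):
    """Return the reasons (if any) a transaction looks suspicious.

    txn and each history entry are dicts with hypothetical keys
    'amount' (currency units) and 'ts' (seconds since epoch).
    """
    reasons = []
    amounts = [t["amount"] for t in history]
    if len(amounts) >= min_history:
        mu, sigma = mean(amounts), pstdev(amounts)
        # z-score rule: how many standard deviations from this customer's norm
        if sigma > 0 and abs(txn["amount"] - mu) / sigma > z_threshold:
            reasons.append("amount_outlier")
    # velocity rule: count prior transactions in the 60 seconds before this one
    recent = [t for t in history if 0 <= txn["ts"] - t["ts"] <= 60]
    if len(recent) >= max_per_minute:
        reasons.append("high_velocity")
    return reasons


history = [{"amount": a, "ts": t} for a, t in zip([20, 22, 19, 21, 18], [0, 100, 200, 300, 400])]
print(score_transaction({"amount": 500, "ts": 10_000}, history))  # ['amount_outlier']
print(score_transaction({"amount": 21, "ts": 1_000}, history))    # []
```

In a lake-backed setup, the customer history would come from a curated (silver or gold) table, while the live scoring runs in a low-latency service.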

Organize the data lake into distinct zones for raw data, feature stores, and model artefacts. Use data versioning to support A/B testing and model reproducibility, enabling continuous experimentation and improvement.
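One lightweight way to version datasets is to derive the version ID from the content itself, so identical data always gets the same ID and any change produces a new one. The Python sketch below assumes a hypothetical `features/<dataset>/v=<version>/` path convention and an in-memory registry standing in for wherever manifests would actually be stored; it is not a specific Azure feature. Dedicated table formats such as Delta Lake offer richer versioning if you need it.

```python
import hashlib


def snapshot_version(files):
    """Deterministic version ID from a snapshot's contents (path -> bytes)."""
    digest = hashlib.sha256()
    for path in sorted(files):  # sort so dict ordering never changes the ID
        digest.update(path.encode())
        digest.update(b"\0")
        digest.update(hashlib.sha256(files[path]).digest())
    return digest.hexdigest()[:12]


def write_manifest(dataset, files, registry):
    """Record an immutable snapshot; training runs store the version they used."""
    version = snapshot_version(files)
    manifest = {
        "dataset": dataset,
        "version": version,
        "files": sorted(files),
        "path": f"features/{dataset}/v={version}/",  # hypothetical zone/path convention
    }
    registry.setdefault(dataset, {})[version] = manifest
    return manifest


registry = {}
m1 = write_manifest("churn", {"part-0.parquet": b"abc", "part-1.parquet": b"def"}, registry)
m2 = write_manifest("churn", {"part-0.parquet": b"abc", "part-1.parquet": b"xyz"}, registry)
print(m1["path"], m2["path"])  # two distinct, reproducible snapshot paths
```

Pinning model A to `m1["version"]` and model B to `m2["version"]` lets an A/B comparison attribute differences to the data change rather than to drift in an unversioned folder.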

Employ caching for frequently accessed features, partition data according to model training patterns, and use columnar formats such as Parquet for analytical workloads.

Implement automated data quality checks that validate training data integrity, establish model lineage tracking for compliance, and create access controls that protect sensitive training data.
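An automated quality gate can be as simple as a function that returns a list of problems and blocks training when the list is non-empty. The Python sketch below checks required columns, nulls, types, and value ranges; the schema format and example columns are hypothetical, and teams often adopt a dedicated validation library for the same purpose.

```python
def validate(rows, schema, ranges=None):
    """Return human-readable problems; an empty list means the batch passes.

    schema maps column -> expected Python type; ranges maps column -> (low, high).
    """
    ranges = ranges or {}
    problems = []
    for i, row in enumerate(rows):
        missing = set(schema) - set(row)
        if missing:
            problems.append(f"row {i}: missing {sorted(missing)}")
            continue
        for col, expected_type in schema.items():
            value = row[col]
            if value is None:
                problems.append(f"row {i}: {col} is null")
            elif not isinstance(value, expected_type):
                problems.append(f"row {i}: {col} expected {expected_type.__name__}, "
                                f"got {type(value).__name__}")
            elif col in ranges:
                low, high = ranges[col]
                if not low <= value <= high:
                    problems.append(f"row {i}: {col}={value} outside [{low}, {high}]")
    return problems


schema = {"age": int, "income": float}
ranges = {"age": (0, 120)}
rows = [{"age": 30, "income": 5.0}, {"age": -1, "income": 2.0}, {"age": 40},
        {"age": "x", "income": 1.0}, {"age": 50, "income": None}]
for problem in validate(rows, schema, ranges):
    print(problem)
```

Storing the problem list alongside the dataset version it was run against also gives a simple audit trail for the lineage and compliance needs mentioned above.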

Track model accuracy drift, data pipeline health, and resource utilization to ensure optimal performance and cost efficiency. Azure Monitor provides real-time visibility into model training progress, feature pipeline health, and inference latency.
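Drift can be tracked even before labels arrive by comparing a feature's live distribution with its training distribution. One widely used measure is the Population Stability Index, PSI = Σ (aᵢ − eᵢ) · ln(aᵢ / eᵢ), where eᵢ and aᵢ are the expected (training) and actual (live) proportions in bin i. The Python sketch below assumes fixed bin edges and uses the common rule-of-thumb cut-offs of 0.1 and 0.25; both are conventions to tune, not Azure Monitor settings.

```python
import math
from bisect import bisect_right


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a training sample and a live sample."""
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_level(value):
    """Rule-of-thumb interpretation of a PSI value."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate"
    return "significant"


training = [1, 2, 3, 4] * 25
edges = [1.5, 2.5, 3.5]
print(drift_level(psi(training, [1, 2, 3, 4] * 24 + [4] * 4, edges)))  # stable
print(drift_level(psi(training, [4] * 100, edges)))                    # significant
```

A scheduled job could compute PSI per feature and publish the values as custom metrics, so alerting rules can fire when a feature crosses the chosen threshold.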

Combining Azure Data Lake Storage Gen2 with analytics and AI is more than a technology upgrade; it is a ground-up rethink of how organizations can use their data assets to differentiate themselves. By moving beyond backward-looking reporting to intelligent systems that forecast, recommend, and automate, companies can unlock value from Azure Data Lake that was previously out of reach.

As a Microsoft Azure partner, Polestar Analytics has extensive experience deploying AI-driven Azure Data Lake solutions across sectors. Our strength lies in architecting Azure Data Lake solutions that turn raw data into intelligent business capabilities, driving your organization's AI journey through trusted Azure Data Lake services.

Understanding Azure Data Lake's cost structure helps organizations optimize their AI investments. Consider two typical workload profiles:

AI training: Batch processing for model training benefits from cool storage ($0.01/GB/month) for historical training data, while active experiments use hot storage ($0.019/GB/month) for faster access.

Real-time AI inference: Production models require low-latency access, which justifies hot storage costs, and benefit from predictable transaction pricing starting at $0.0228 per 10,000 operations.
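The effect of tiering is easiest to see with a worked example. The Python sketch below uses the rates quoted above and, as a simplifying assumption, applies the single transaction rate to all operations (in practice, cool-tier transactions are usually priced higher, and all rates vary by region, redundancy option, and over time). The 100,000 GB dataset and 50 million monthly operations are hypothetical figures.

```python
from decimal import Decimal

# Rates quoted in the text; treat them as illustrative, not a current price list.
HOT_PER_GB_MONTH = Decimal("0.019")
COOL_PER_GB_MONTH = Decimal("0.01")
PER_10K_OPERATIONS = Decimal("0.0228")  # assumption: one rate for all operations


def monthly_cost(hot_gb, cool_gb, operations):
    """Estimated monthly cost in dollars, rounded to cents."""
    storage = hot_gb * HOT_PER_GB_MONTH + cool_gb * COOL_PER_GB_MONTH
    transactions = Decimal(operations) / Decimal(10_000) * PER_10K_OPERATIONS
    return (storage + transactions).quantize(Decimal("0.01"))


# Hypothetical 100,000 GB of training data with 50 million operations per month.
all_hot = monthly_cost(100_000, 0, 50_000_000)
tiered = monthly_cost(20_000, 80_000, 50_000_000)  # 80% historical data moved to cool
print(all_hot)           # 2014.00
print(tiered)            # 1294.00
print(all_hot - tiered)  # 720.00
```

`Decimal` is used instead of `float` so the cent-level arithmetic is exact.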

Organizations can achieve cost reduction in AI operations through:

The journey continues with new capabilities that offer even smarter automation:

These developments will further expand what AI can deliver, allowing organizations of any size to use intelligent data lakes to stay competitive.

About Author

Khaleesi of Data

Commanding chaos, one dataset at a time!